Google's Guide to Prompt Engineering

TLDR

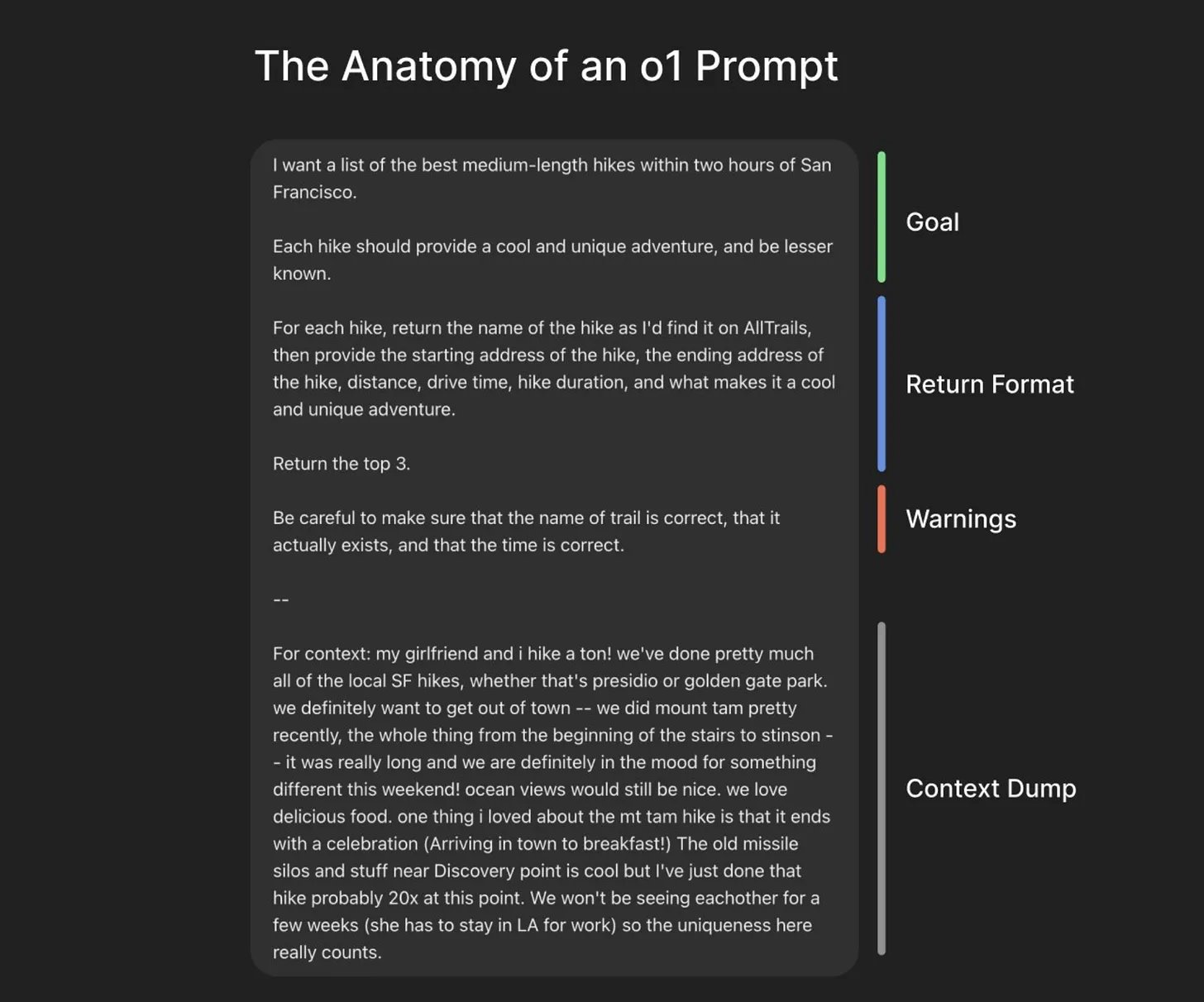

When it comes to prompt engineering, I prefer the straightforward guidance from OpenAI and Anthropic: clear, concise, and actionable.

Comparing OpenAI and Anthropic Prompting Frameworks

Before diving into Google’s approach, here’s how OpenAI and Anthropic structure their prompting advice, setting the stage for why we favor their methods:

| Aspect | Greg Brockman’s Guide (OpenAI) | Anthropic’s Guidance (Claude) |
|---|---|---|
| Core Goal | State your goal clearly with specific details to avoid vague outputs. Example: “Compare Arabica vs. Robusta coffee by taste, caffeine, and brewing methods.” | Clearly describe the task in natural language, treating Claude like a helpful assistant. Example: “Summarize the key causes of the French Revolution in three paragraphs.” |
| Output Structure | Specify the desired return format (e.g., bullet points, table, summary). Example: “List hikes with names, distances, and durations in a table.” | Use structured prompts, often with XML tags, to organize tasks and outputs. Example: “ |
| Constraints | Set warnings and guardrails, like accuracy checks or content to avoid. Example: “Ensure hikes exist on AllTrails, exclude any over 10 miles.” | Define boundaries (e.g., avoid jargon, limit length) but avoid overly negative instructions due to “reverse psychology” effect. Example: “Keep the summary concise, under 200 words.” |
| Context Provision | Provide relevant background (e.g., audience, purpose) to tailor responses. Example: “I’m a beginner coffee drinker with a basic coffee maker, preferring mild flavors.” | Include context like audience or purpose, often within structured prompts. Example: “Write a marketing email for tech-savvy millennials, casual tone.” |
| Iteration | Iteratively refine prompts based on AI output to improve accuracy and relevance. General Approach: Tweak prompts after reviewing responses. | Emphasize iteration with tools like Prompt Improver and chaining prompts for complex tasks. Example: Break a research task into steps, refining each prompt. |
| Unique Features | Streamlined four-pillar framework (Goal, Format, Constraints, Context) for universal application. | Modular approach with XML tags, few-shot prompting (examples), and a Prompt Library for templates. Example: Provide a sample summary to guide output style. |
| Source | Derived from Brockman’s talks and OpenAI’s engineering practices (e.g., Ben Hylak’s framework). | Spread across Anthropic’s docs, Console, Prompt Engineering Tutorial, and Prompt Library. |

This table highlights why we prefer OpenAI’s concise checklist and Anthropic’s flexible, Claude-specific tools. Google’s guide, explored next, still brings its own strengths to the table.
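As a rough illustration of how the two frameworks fit together, here is a minimal Python sketch that assembles a prompt from the four pillars in the table and can optionally wrap each part in XML-style tags, in the spirit of Anthropic's advice. The function name `build_prompt`, the section order, and the tag names are my own illustrative assumptions, not an official OpenAI or Anthropic format; the hiking goal, format, and constraint reuse the table's examples, while the context line is invented.

```python
# A minimal sketch, assuming a four-part prompt (goal, return format, warnings,
# context). The helper name, section order and tag names are hypothetical.

def build_prompt(goal, return_format, warnings, context, use_xml_tags=False):
    """Assemble a prompt from the four pillars, always in the same order."""
    sections = [
        ("goal", goal),
        ("format", return_format),
        ("warnings", warnings),
        ("context", context),
    ]
    parts = []
    for name, text in sections:
        if use_xml_tags:
            # Anthropic-style: each part wrapped in a named tag.
            parts.append(f"<{name}>\n{text.strip()}\n</{name}>")
        else:
            # Plain-text style: a short label followed by the content.
            parts.append(f"{name.capitalize()}: {text.strip()}")
    # A blank line between sections keeps them visually separate.
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_prompt(
        goal="Suggest three day hikes.",
        return_format="List hikes with names, distances, and durations in a table.",
        warnings="Ensure hikes exist on AllTrails, exclude any over 10 miles.",
        context="I'm a beginner hiker with a free Saturday.",  # invented example
        use_xml_tags=True,
    ))
```

The same content renders either way, so switching between the plain-label and tagged styles is a single flag rather than a rewrite of the prompt.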

Google's Prompting Advice

To be fair to Google, getting LLMs like Gemini to do what you want often feels like an art form. But I'm not sure that justifies a 68-page whitepaper. Anyway, here is a summary:

Start Simple, Be Specific

Often, the most effective prompts are surprisingly straightforward. If a simple instruction or question (known as "zero-shot" prompting) gets you the desired result, don’t overcomplicate things.

However, clarity is crucial. Vague prompts lead to vague answers. Be specific about what you want the output to look like, the format, the length, and the style. Using clear action verbs (like "Summarize," "Classify," "Generate," "Translate") helps guide the model effectively. Interestingly, research suggests telling the model what to do is often better than telling it what not to do.
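A minimal sketch of the difference between a vague and a specific zero-shot prompt, in Python. The hypothetical helper `zero_shot_prompt` turns one action verb plus an explicit format, length, and style into a single prompt, with every instruction phrased as something to do rather than something to avoid. The parameter names and wording are illustrative assumptions, not taken from Google's whitepaper.

```python
# A minimal sketch: build a specific zero-shot prompt from an action verb plus
# explicit format, length and style. Helper and parameter names are hypothetical.

def zero_shot_prompt(verb, task, output_format, length, style):
    """Return a single-turn prompt with no examples, one instruction per line."""
    return (
        f"{verb} {task}\n"
        f"Format: {output_format}\n"
        f"Length: {length}\n"
        f"Style: {style}"
    )


# Vague: leaves format, length and style entirely up to the model.
vague = "Tell me about this article."

# Specific: an action verb plus positive instructions ("use plain language")
# instead of prohibitions ("don't use jargon").
specific = zero_shot_prompt(
    "Summarize",
    "the article below for a general audience.",
    output_format="three bullet points",
    length="under 60 words in total",
    style="plain language a non-specialist can follow",
)

print(vague)
print(specific)
```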

The Power of Examples (Few-Shot Prompting)

When zero-shot isn’t enough, providing examples is one of the most powerful techniques. Showing the model one ("one-shot") or a few ("few-shot") examples of the desired input/output pattern helps it understand the task and mimic the format or style you need.

For few-shot prompting:

- Use at least 3-5 high-quality, diverse examples.

- Ensure examples are directly relevant to your task.

- Include edge cases if you need robust handling.

- For classification tasks, mix up the classes in your examples to avoid accidental bias.
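To make the checklist concrete, here is a hypothetical Python sketch of a few-shot sentiment-classification prompt with five examples, including a neutral edge case. The examples are reordered round-robin by label, which is one simple way to mix up the classes so that no label appears as one long run. The `Review:`/`Sentiment:` format, the labels, and the example texts are illustrative assumptions.

```python
from collections import defaultdict
from itertools import zip_longest

# A minimal sketch of a few-shot classification prompt. Examples are interleaved
# round-robin by label so classes are mixed. Format and labels are hypothetical.


def interleave_by_label(examples):
    """Reorder (text, label) pairs so consecutive examples cycle through labels."""
    buckets = defaultdict(list)  # label -> its examples, in original order
    for text, label in examples:
        buckets[label].append((text, label))
    ordered = []
    # Take one example from each label per round; shorter buckets pad with None.
    for group in zip_longest(*buckets.values()):
        ordered.extend(pair for pair in group if pair is not None)
    return ordered


def few_shot_prompt(instruction, examples, query):
    """Instruction, then the interleaved examples, then the unlabeled query."""
    lines = [instruction, ""]
    for text, label in interleave_by_label(examples):
        lines.append(f"Review: {text}\nSentiment: {label}\n")
    lines.append(f"Review: {query}\nSentiment:")  # the model completes the label
    return "\n".join(lines)


examples = [
    ("Loved every minute of it.", "POSITIVE"),
    ("Great value for the price.", "POSITIVE"),
    ("Arrived broken and late.", "NEGATIVE"),
    ("Would not buy again.", "NEGATIVE"),
    ("It does what it says, nothing more.", "NEUTRAL"),  # edge case
]

print(few_shot_prompt(
    "Classify each review as POSITIVE, NEGATIVE, or NEUTRAL.",
    examples,
    "The battery life is fine, but the screen scratches easily.",
))
```

With the examples above, the labels come out in the order POSITIVE, NEGATIVE, NEUTRAL, POSITIVE, NEGATIVE rather than grouped by class.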

Know Your Techniques

Beyond basic prompting and examples, several advanced techniques can tackle more complex reasoning or tasks:

- Role & Context: Assigning the LLM a role ("Act as a travel guide") or providing specific context ("You are writing for a blog about retro games") helps tailor its tone, style, and knowledge.

- Chain of Thought (CoT): Ask the model to "think step by step" before giving the final answer. This often improves performance noticeably on tasks requiring reasoning, like math or logic problems. Combining CoT with few-shot examples is particularly powerful.

- Step-Back: Have the LLM first answer a broader, more general question related to the task before tackling the specific request. This helps activate relevant knowledge.

- ReAct: This technique allows the LLM to reason and act by interacting with external tools (like search). It follows a thought-action-observation loop, useful for tasks needing up-to-date information or external computation.
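The techniques above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the template wording is mine rather than Google's, `scripted_model` is a stand-in that replays a fixed trace instead of calling a real LLM, and the `multiply` tool and the `Action: tool[input]` format are hypothetical. Using a real model would mean replacing `scripted_model` with a function that sends the transcript to your provider and returns the generated text.

```python
import math
import re

# --- Prompt templates (wording is an illustrative assumption) ---


def with_role(role, task):
    """Role & context: prepend a persona to the task."""
    return f"Act as {role}.\n\n{task}"


def chain_of_thought(question):
    """Chain of thought: ask for step-by-step reasoning before the answer."""
    return f"{question}\n\nLet's think step by step, then give the final answer."


def step_back(question, broader_answer):
    """Step-back, second stage: the answer to a broader question, obtained in an
    earlier call, becomes background for the specific question."""
    return f"Background:\n{broader_answer}\n\nUsing this background, answer: {question}"


# --- ReAct: a thought-action-observation loop ---

# Hypothetical action format, e.g. "Action: multiply[17,23]".
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*?)\]")

# Hypothetical tool registry: tool name -> function from string to string.
TOOLS = {
    "multiply": lambda arg: str(math.prod(int(x) for x in arg.split(","))),
}


def scripted_model(transcript):
    """Stand-in for a real LLM call: replays a fixed two-step trace."""
    if "Observation:" not in transcript:
        return "Thought: I should use the multiply tool.\nAction: multiply[17,23]"
    observation = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: The tool returned {observation}.\nFinal Answer: {observation}"


def react(question, model, tools, max_steps=5):
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = model(transcript)  # a Thought plus either an Action or a Final Answer
        transcript += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(step)
        if match is None:
            raise ValueError(f"no action in model output: {step!r}")
        name, arg = match.groups()
        # Run the tool and feed its result back as the next Observation.
        transcript += f"Observation: {tools[name](arg)}\n"
    raise RuntimeError("no final answer within max_steps")


print(react("What is 17 * 23?", scripted_model, TOOLS))  # prints 391
```

The loop stops as soon as the model emits a `Final Answer:` line; `max_steps` guards against a model that never does.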

The Takeaway

I'm going to stick with the OpenAI and Anthropic guides; they're just more straightforward.